The Boise boom: How mapmakers keep on top of boomtowns


The world is changing by the minute. And, thanks to localized economic development and sudden population growth, some places are changing faster than others. Keeping up with that change isn’t easy.

The emergence of boomtowns (towns that experience rapid population and economic growth) around the world poses an ever-evolving challenge to mapmakers, who aim to keep their maps fresh, accurate and as close a reflection of the real world as possible.

Boise, located on the Boise River in southwestern Idaho, USA, is one such town. Labelled the fastest-growing city in the US in 2018 by Forbes, the capital of Idaho has been drawing in tens of thousands of new residents each year thanks to its affordable cost of living, desirable work-life balance and proximity to nature. The boom intensified during the COVID-19 pandemic, as remote working became the norm and the allure of fast-paced big-city life began to fade.

Drawing tens of thousands of new residents every year, Boise, Idaho, has emerged as one of the fastest growing cities in the USA.

The need to make the city more livable for its new inhabitants has led to the development of all kinds of new facilities, from housing and schools to hospitals and entertainment venues. Naturally, growing cities also need expanded road networks to connect their new residents.

When moving to a new city, one of the first things people consider is how accessible local amenities like schools, supermarkets, public transport and healthcare centers are. Without accurate, up-to-date digital maps, getting to know a rapidly developing city you've just moved to is virtually impossible.

This doesn't just inconvenience residents; it also affects businesses operating in the area that rely on the services of mapmakers like TomTom to make deliveries and run their vehicle fleets.

“Say a street in a rapidly developing city like Boise has 10 houses on it, and each of those 10 houses receives 10 deliveries a year, which are made by our customers. That’s about 100 times a year that they're calling on our map service, and if this street isn’t accurately mapped, we're not able to fulfill our responsibility, costing them precious time and money,” says Saul Nochumson who leads product development as part of TomTom’s Community and Partnership team.

It can be frustrating to expect a delivery, and then find it being returned or delivered to a neighbour because your address wasn’t accurately displayed on a digital map. Fresh location data is also critical when it comes to ensuring that maps can guide their users around obstacles, such as construction sites or road closures.

With the world changing so rapidly, mapmakers must determine where exactly these changes are happening, so these areas can be given special attention and mapped accordingly.

Identifying areas that need attention

TomTom uses a multi-source approach to detect real-world changes that need to be reflected on its maps, drawing on survey vehicles, GPS traces, community input, government sources and vehicle sensor data, among others. This not only keeps maps accurate but also ensures that places such as Boise don't slip off mapmakers' radar.

TomTom also relies on “local intelligence”: regional sourcing specialists who monitor factors such as population growth and migration patterns across states to predict which areas are “booming” and require more attention.

“Using city-level, sometimes even county-level analysis, we’ve seen Boise becoming very popular for West Coast migration, making it an important spot for us,” says Peter King, who leads work on sourcing operations for the western half of the USA in TomTom’s map unit.
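To make the idea of spotting “booming” areas more concrete, here is a minimal sketch that flags areas whose year-over-year population growth exceeds a threshold. The area names, population figures and the 2% threshold are all hypothetical; the article does not describe how TomTom's specialists actually weigh growth or migration signals.

```python
from dataclasses import dataclass


@dataclass
class AreaStats:
    name: str                  # hypothetical area identifier (city or county)
    population_last_year: int
    population_this_year: int


def growth_rate(area: AreaStats) -> float:
    """Year-over-year population growth as a fraction (0.03 means 3%)."""
    return (area.population_this_year - area.population_last_year) / area.population_last_year


def flag_booming(areas: list[AreaStats], threshold: float = 0.02) -> list[str]:
    """Return names of areas growing faster than `threshold`, fastest first."""
    booming = [a for a in areas if growth_rate(a) > threshold]
    booming.sort(key=growth_rate, reverse=True)
    return [a.name for a in booming]


if __name__ == "__main__":
    # Made-up figures, for illustration only.
    areas = [
        AreaStats("county_a", 500_000, 520_000),      # +4.0%
        AreaStats("county_b", 1_000_000, 1_005_000),  # +0.5%
        AreaStats("county_c", 200_000, 206_000),      # +3.0%
    ]
    print(flag_booming(areas))  # ['county_a', 'county_c']
```

In practice, a watch list like this would only be a starting point: the human specialists quoted above combine it with local knowledge before deciding where to focus mapping effort.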

The population boom in Boise has led to subsequent urban development, requiring mapmakers to take note and ensure digital maps reflect these changes quickly and accurately.

Government data can also make for a helpful resource. For example, every month, the US Postal Service adds new addresses — now receiving mail — to its records. The monthly address update is another useful source to alert mapmakers about potential changes in the world that need to be mapped.
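One simple way to use a monthly address update as an alert source is to check which newly listed addresses the map doesn't yet know about, then group them by street to see where editors should look first. The sketch below assumes plain “number street” strings and a very crude normalization step; real USPS data products have their own formats, and this is not TomTom's pipeline.

```python
from collections import Counter


def normalize(address: str) -> str:
    """Crude normalization (assumption): uppercase and collapse whitespace."""
    return " ".join(address.upper().split())


def missing_from_map(new_addresses: list[str], map_addresses: set[str]) -> list[str]:
    """Addresses in the monthly update that the map does not yet contain."""
    known = {normalize(a) for a in map_addresses}
    return sorted({normalize(a) for a in new_addresses} - known)


def streets_to_review(missing: list[str]) -> Counter:
    """Count missing addresses per street (everything after the house number)."""
    return Counter(addr.split(" ", 1)[1] for addr in missing if " " in addr)


if __name__ == "__main__":
    # Hypothetical addresses, for illustration only.
    update = ["12 Maple Ln", "14  maple ln", "3 Oak St"]
    on_map = {"3 OAK ST"}
    missing = missing_from_map(update, on_map)
    print(missing)                     # ['12 MAPLE LN', '14 MAPLE LN']
    print(streets_to_review(missing))  # Counter({'MAPLE LN': 2})
```

A street with several unmatched addresses is a strong hint that a new road or development hasn't been mapped yet.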

“The US government actively tracks migration from state to state, and individual municipalities also provide us with sources to feed into our maps,” says King. “In this case, we have been working with the state of Idaho for several years. When they released state-wide geographical data a little over two years ago, we were able to zoom in on Boise and compare the localities with what our maps recognized. As the speed of development increased, so did our focus on the area.”

Once these areas have been identified, the question of how to edit the map arises. Although mapmakers generally automate map editing to streamline the process, automation is not always the best choice. It may not work as well in rapidly developing areas like Boise as it does in what King calls “maintenance geographies”: established areas such as New York City (NYC) or Amsterdam, where the volume of map changes is much lower.

Where automation fails

Automation enables changes to be added to the base map without much human intervention. Essentially, new location data containing additions, modifications or deletions to the map comes as a data set, after which it’s lumped together and added onto the current map.

Once the data has been ingested, the map can be cleaned up to fix minor inaccuracies such as misspelled street names. This works reasonably well in a largely established city like NYC, where changes usually amount, at most, to the closure of a road or the opening of a new shop.

However, King explains, a map is like a patchwork quilt of data: it is made up of several different sources that vary significantly in quality but can work together with careful crafting.

Lumping sources together and automating their ingestion without analyzing the quality of each one risks degrading the map. At the same time, examining every new change for quality makes the process painfully slow, so valuable source material is left unused or grows so old that it is no longer useful.
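The automated flow described above can be modeled, very loosely, as applying batches of additions, modifications and deletions to a base map and then running a cleanup pass. In this toy sketch the map is a dictionary keyed by hypothetical feature IDs, and the spelling-correction table is an assumption; note that nothing checks the quality of each source before its changes are merged.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeSet:
    added: dict[str, dict] = field(default_factory=dict)     # new features by ID
    modified: dict[str, dict] = field(default_factory=dict)  # attribute updates by ID
    deleted: set[str] = field(default_factory=set)           # IDs to remove


def apply_changes(base_map: dict[str, dict], batches: list[ChangeSet]) -> dict[str, dict]:
    """Merge every batch into a copy of the base map, in order, with no quality checks."""
    result = {fid: dict(attrs) for fid, attrs in base_map.items()}
    for batch in batches:
        for fid, attrs in batch.added.items():
            result[fid] = dict(attrs)
        for fid, attrs in batch.modified.items():
            if fid in result:  # updates to unknown features are silently ignored
                result[fid].update(attrs)
        for fid in batch.deleted:
            result.pop(fid, None)
    return result


def clean_names(map_data: dict[str, dict], corrections: dict[str, str]) -> dict[str, dict]:
    """Cleanup pass: replace known misspelled street names in place."""
    for attrs in map_data.values():
        name = attrs.get("name")
        if name in corrections:
            attrs["name"] = corrections[name]
    return map_data


if __name__ == "__main__":
    base = {"r1": {"name": "Main St"}, "r2": {"name": "Old Rd"}}
    batch = ChangeSet(added={"r3": {"name": "Mian St"}},
                      modified={"r1": {"lanes": 2}},
                      deleted={"r2"})
    print(clean_names(apply_changes(base, [batch]), {"Mian St": "Main St"}))
```

When changes are few and sources are well understood, as in a maintenance geography, this “merge, then tidy up” approach is cheap; the trouble starts when large, uneven batches arrive from sources of very different quality.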

One step closer

To address the downsides of automation and update maps for boomtowns faster than manual processes allow, King and his colleagues created a new tool. Known as the Proactive Sourcing tool (PAS), it compares incoming data containing changes against the data already reflected on the map.

Put simply, if every delivery from a source is compared with the original base map, there will always be a lot of differences, TomTom Regional Sourcing Specialist Thomas Byker tells me. Instead, PAS compares the newest data from a source against the previously delivered version, so editors see only the smaller changes the source provider has added within that time period. This keeps the editing process tightly focused on the changes that are freshly happening in a specific area.

This new approach promises faster returns than complete automation. When it comes to accuracy, however, maps updated by PAS can only be as accurate as the source data itself, which varies widely in quality. In rapidly growing suburban areas especially, obstructions like tree cover, combined with mountainous topography, can make it difficult to quickly assess the quality of source data, according to TomTom Regional Sourcing Specialist Kurt McClure.
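The core idea of diffing consecutive deliveries from the same source, rather than diffing each delivery against the base map, can be sketched as below. This is only an illustration under stated assumptions: each delivery is modeled as a dictionary of features keyed by IDs that stay stable between deliveries, and the function names are hypothetical. The article does not describe how PAS is actually implemented.

```python
from typing import NamedTuple


class Delta(NamedTuple):
    added: dict[str, dict]    # features new in the latest delivery
    removed: set[str]         # feature IDs that disappeared since the last delivery
    changed: dict[str, dict]  # features whose attributes differ between deliveries


def delivery_delta(previous: dict[str, dict], latest: dict[str, dict]) -> Delta:
    """Compare a source's latest delivery with its previous one (assumes stable IDs)."""
    prev_ids, new_ids = previous.keys(), latest.keys()
    added = {fid: latest[fid] for fid in new_ids - prev_ids}
    removed = set(prev_ids - new_ids)
    changed = {fid: latest[fid] for fid in new_ids & prev_ids if latest[fid] != previous[fid]}
    return Delta(added, removed, changed)


if __name__ == "__main__":
    # Hypothetical deliveries from one source, a month apart.
    last_month = {"a": {"name": "Elm St"}, "b": {"name": "Pine St"}}
    this_month = {"a": {"name": "Elm St"}, "b": {"name": "Pine Ave"}, "c": {"name": "Birch Ln"}}
    print(delivery_delta(last_month, this_month))
```

Because unchanged features drop out of the delta, editors are left with a short list of genuinely new or edited features instead of every long-standing discrepancy between the source and the base map.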

So, while PAS could help keep up with how fast cities like Boise expand, there is still scope to address accuracy. As it turns out, that’s possible using another in-house map editor.

A collaborative approach to map editing

Since October 2020, King’s team has been using Vertex, a visual map editor designed to ensure both freshness and overall quality of map data for TomTom and its partners.

“We wanted to take a more proactive approach to map editing. We saw the need for a tool to process numerous high-quality leads and sources at a faster speed. So, we provided a semi-automated solution that empowers mapmakers instead of having them depend on automated processes, especially in high-growth areas,” says Nochumson, who manages the day-to-day development of the tool.

Using a combination of local knowledge, aerial imagery and probe data, Vertex automatically proposes map updates to human editors — who then have the option to accept or reject them.
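The propose-then-review loop can be illustrated with a small queue in which each proposed update carries the evidence behind it and an editor accepts or rejects it. Everything here is hypothetical: `Proposal`, `review` and the evidence labels are invented for the sketch, and the `decide` callback merely stands in for a human editor's judgment. It is not Vertex's actual interface.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    feature_id: str     # hypothetical ID of the road or feature to add/update
    attrs: dict         # proposed attributes, e.g. {"name": "Sage St"}
    evidence: list[str] # e.g. ["aerial imagery", "probe data"]


def review(proposals: list[Proposal], base_map: dict[str, dict],
           decide: Callable[[Proposal], bool]) -> tuple[dict[str, dict], list[Proposal]]:
    """Apply proposals the editor accepts; return the updated map and rejected proposals."""
    updated = {fid: dict(attrs) for fid, attrs in base_map.items()}
    rejected: list[Proposal] = []
    for p in proposals:
        if decide(p):
            updated.setdefault(p.feature_id, {}).update(p.attrs)
        else:
            rejected.append(p)
    return updated, rejected


if __name__ == "__main__":
    proposals = [
        Proposal("r10", {"name": "Sage St"}, ["aerial imagery", "probe data"]),
        Proposal("r11", {"name": "Quail Dr"}, ["probe data"]),
    ]
    # Stand-in for a human editor: accept only proposals backed by two or more sources.
    new_map, rejected = review(proposals, {}, lambda p: len(p.evidence) >= 2)
    print(new_map, [p.feature_id for p in rejected])
```

Keeping the decision in a callback mirrors the semi-automated split described here: the tool does the proposing, while a person remains responsible for what actually lands on the map.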

King’s team already has data from countless different sources made available for ingestion by the PAS tool. Once imported into Vertex as an editable layer, this data can act as a base for the proposed changes.

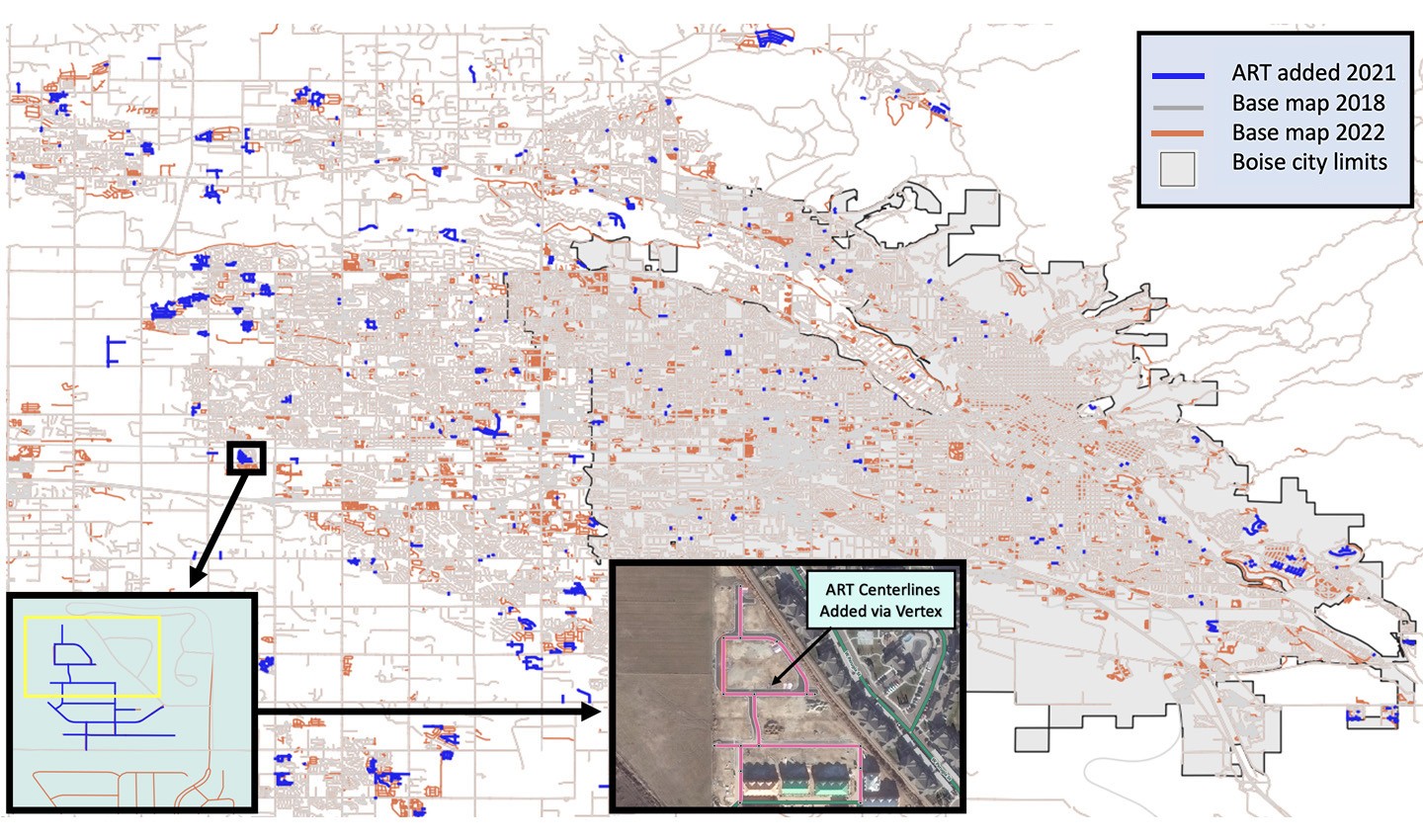

Think of it as a dish made with ingredients sourced from different places. When map data prepared by PAS is entered into what is called the Automated Road Tool (ART) layer in Vertex, it produces proposed map changes for editors to consider. Editors can also adjust the dish to their taste, for example by adding missing street names or correcting incorrect ones.

In a little over a year, ART has generated more than 50,000 kilometers of new road updates for editors to consider, not only in Boise but also in similar geographies such as Denton, Texas. This is a vast improvement over complete automation, the method mapmakers relied on earlier, which risked letting maps go stale in areas like Boise because of the varying quality of source data.

Boise has been developing rapidly over the past few years. Using the ART layer in Vertex, TomTom mapmakers can make map edits in a much easier and faster way.

According to TomTom Senior Project Manager David Salmon, Vertex allows for a faster turnaround due to lower barriers to entry. “We can now do away with weeks and weeks of editing training, wherein we had to prepare the map to automatically ingest not just source data, but also specific attributes such as POI data or lane attribution on roads. By adding the ART layer in Vertex, anybody within TomTom can contribute to map freshness. And since the sourcing operations team is instantly alerted of this change, they can get in the data much faster, improving the map editing cycle.”

Of course, there is the question of how efficient this process really is if each proposed change needs to be manually approved. As Salmon sees it, the updates proposed by ART are much smaller in volume than the large-scale updates made by automated processes in maintenance geographies.

“In a single city like Boise, we might only be adding 10 new streets on a given day, allowing us to really focus on the minute details.”

While the process that led to its adoption might seem complex, using Vertex to keep maps fresh and accurate is as simple as it sounds.

As populations shift and many big cities grapple with housing crises, people are increasingly choosing to move to smaller towns. Using this map editing technique, TomTom mapmakers can help them make informed decisions about where to build their new lives and easily find their way around their new cities.
